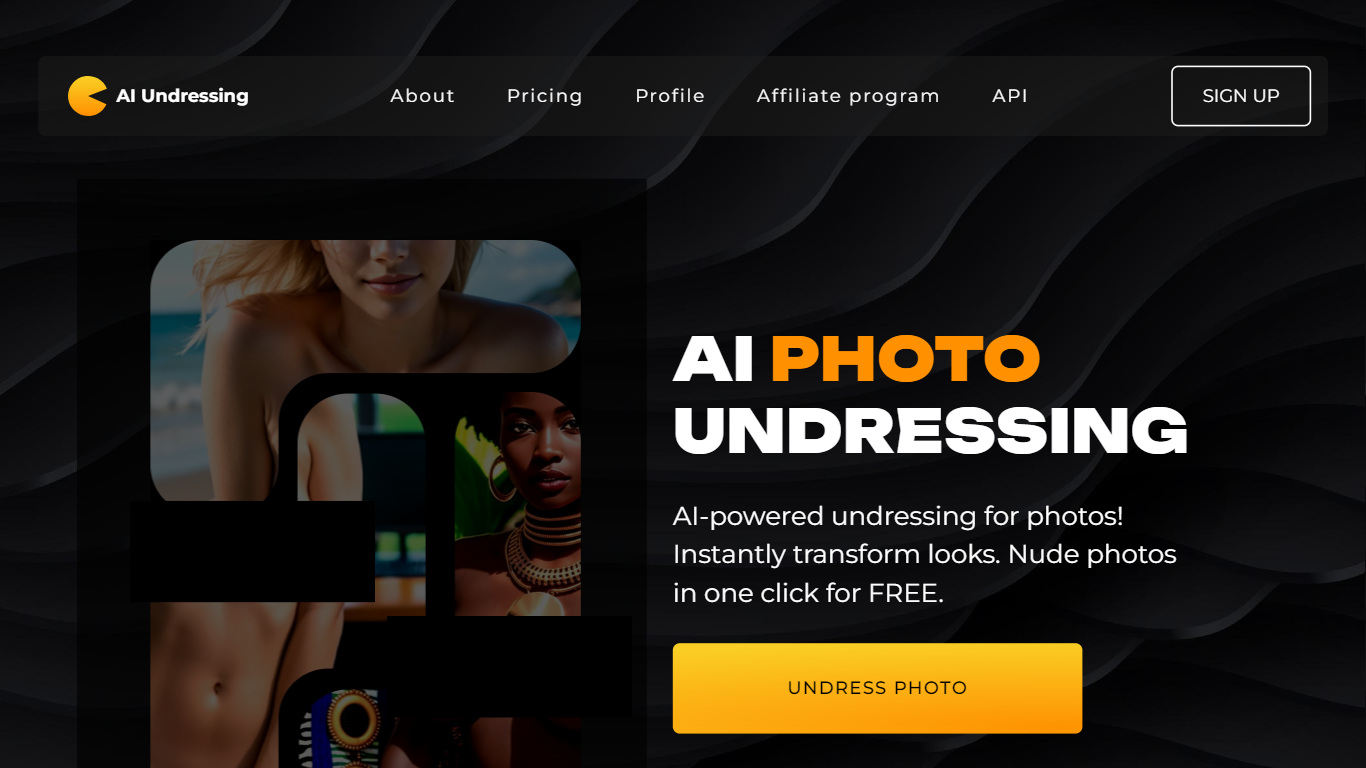

The term “undress AI remover” refers to a controversial and rapidly emerging class of artificial intelligence tools designed to digitally remove clothing from images, often marketed as entertainment or “fun” photo editors. At first glance, such technology may look like an extension of harmless photo-editing innovations. Beneath the surface, however, lies a troubling ethical dilemma and the potential for severe abuse. These tools typically use deep learning models, such as generative adversarial networks (GANs), trained on datasets of human bodies to realistically simulate what a person might look like without clothes, without that person's knowledge or consent. While this may sound like science fiction, these apps and web services are becoming increasingly accessible to the public, raising red flags among digital rights activists, lawmakers, and the broader online community. Because virtually anyone with a smartphone or internet connection can use such software, it opens up disturbing possibilities for misuse, including revenge porn, harassment, and violations of personal privacy. Moreover, many of these platforms are not transparent about how uploaded data is collected, stored, or used, and they often evade legal accountability by operating in jurisdictions with lax digital privacy laws.

These tools rely on sophisticated algorithms that fill in visual gaps with fabricated details based on patterns learned from massive image datasets. However impressive this may be from a technological standpoint, the potential for misuse is undeniably high. The results can look shockingly realistic, further blurring the line between what is real and what is fake in the digital world. Victims may discover altered images of themselves circulating online and face embarrassment, anxiety, or even damage to their careers and reputations. This brings into focus questions of consent, digital safety, and the responsibilities of the AI developers and platforms that allow these tools to proliferate. Moreover, a cloak of anonymity often surrounds the developers and distributors of undress AI removers, making regulation and enforcement an uphill battle for authorities. Public awareness of the issue remains low, which only fuels its spread, since many people do not grasp how serious it is to share, or even passively engage with, such altered images.

The societal implications are profound. Women in particular are disproportionately targeted by this technology, which makes it yet another tool in the already sprawling arsenal of digital gender-based violence. Even when an AI-generated image is not widely shared, the psychological impact on the person depicted can be severe. Simply knowing that such an image exists can be deeply distressing, especially because removing content from the internet is nearly impossible once it has been circulated. Human rights advocates argue that these tools are essentially a digital form of non-consensual pornography. In response, some governments have begun considering laws that would criminalize the creation and distribution of AI-generated explicit content made without the subject's consent. Legislation, however, often lags far behind the pace of technology, leaving victims vulnerable and frequently without legal recourse.

Tech companies and app stores also play a role, either enabling or curbing the spread of undress AI removers. When these apps are allowed on mainstream platforms, they gain credibility and reach a wider audience, despite the harmful nature of their use cases. Some platforms have begun taking action by banning certain keywords or removing known violators, but enforcement remains inconsistent. AI developers must be held accountable not only for the algorithms they build but also for how those algorithms are distributed and used. Ethically responsible AI means building in safeguards against misuse, such as watermarking, detection tools, and opt-in-only systems for image manipulation. Unfortunately, in today's ecosystem, profit and virality often override ethics, especially when anonymity shields creators from backlash.

Another emerging concern is the crossover with deepfakes. Undress AI removers can be combined with deepfake face-swapping tools to create entirely fabricated adult content that appears real, even though the person shown never took part in its creation. This adds a layer of deception and sophistication that makes image manipulation harder to detect, especially for an ordinary person without access to forensic tools. Cybersecurity professionals and online safety organizations are now pushing for better education and more public discussion of these technologies. It is crucial to make the average internet user aware of how easily images can be altered and of the importance of reporting such violations when they encounter them online. Detection tools and reverse image search engines must also evolve to flag AI-generated content more reliably and to alert people when their likeness is being misused.

The psychological toll on victims of AI image manipulation is another dimension that deserves more attention. Victims may suffer from anxiety, depression, or post-traumatic stress, and many struggle to seek help because of the taboo and embarrassment surrounding the issue. The harm also erodes trust in technology and digital spaces. If people start to fear that any image they share could be weaponized against them, online expression may be stifled and social media participation may suffer a chilling effect. This is especially harmful for young people who are still learning how to navigate their digital identities. Schools, parents, and educators need to be part of the conversation, equipping younger generations with digital literacy and an understanding of consent in online spaces.

From a legal standpoint, current laws in many countries are not equipped to handle this new form of digital harm. While some nations have enacted revenge porn legislation or laws against image-based abuse, few have specifically addressed AI-generated nudity. Legal experts argue that intent should not be the only factor in determining criminal liability; harm caused, even unintentionally, should carry consequences. There should also be stronger collaboration between governments and tech companies to develop standardized practices for identifying, reporting, and removing AI-manipulated images. Without systemic action, individuals are left to fight an uphill battle with little protection or recourse, which reinforces cycles of exploitation and silence.

Despite these dark implications, there are also signs of hope. Researchers are developing AI-based detection tools that can identify manipulated images, flagging undress AI outputs with high accuracy. These tools are being integrated into social media moderation systems and browser plugins to help users recognize suspicious content. Advocacy groups, meanwhile, are lobbying for stricter international frameworks that define AI misuse and establish clearer user rights. Education is growing as well, with influencers, journalists, and tech critics raising awareness and sparking important conversations online. Transparency from tech firms and open dialogue between developers and the public are critical steps toward building an internet that protects rather than exploits.

Looking ahead, the key to countering the threat of undress AI removers lies in a united front: technologists, lawmakers, educators, and everyday users working together to set boundaries on what should and should not be possible with AI. There must be a cultural shift toward recognizing that digital manipulation without consent is a serious offense, not a joke or a prank. Normalizing respect for privacy online is just as important as building better detection systems or writing new laws. As AI continues to evolve, society must ensure that its advancement serves human dignity and safety. Tools that can undress or violate a person's image should never be celebrated as clever tech; they should be condemned as breaches of ethical and personal boundaries.

Ultimately, “undress AI remover” is not just a trendy keyword; it is a warning sign of how innovation can be misused when ethics are sidelined. These tools represent a dangerous intersection of AI power and human irresponsibility. As we stand on the brink of even more powerful image-generation technologies, it becomes critical to ask: just because we can do something, should we? When it comes to violating someone's image or privacy, the answer must be a resounding no.